GCP Internal LBs, how dirty?

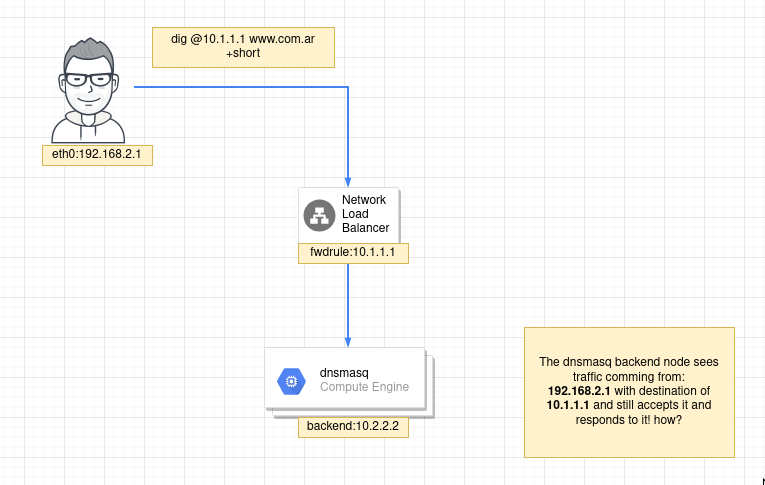

If you ever decide to tcpdump on a node behind a GCP internal load balancer, you will be surprised to see that the packets aren’t destined to the interface IP of your VM: the IP headers keep the destination address of the load balancer. Let me draw it:

I have a normal NLB passing traffic to an instance group with a bunch of dnsmasq nodes, nothing out of the ordinary.

The issue is that if you tcpdump on the dnsmasq nodes, you will find that packets aren’t destined to the node. The interfaces aren’t in promiscuous mode, and the packets are still accepted and answered:

The dnsmasq node has this address:

root@dnsmasq:~# ip -4 -br a | grep ens4

ens4 UP 10.1.16.238/32

No aliases, nothing else. Now if I tcpdump a request:

11:35:47.356361 In aa:aa:aa:aa ethertype IPv4 (0x0800), length 100: 10.10.1.52.55173 > 10.1.16.240.53: 24391+ [1au] A? www.pepinos.com. (56)

So 10.10.1.52 is the user (me). I am querying the LB (forwarding rule) IP (10.1.16.240), and that traffic is forwarded to the dnsmasq instance. So far so good.

But how does the dnsmasq instance respond to a packet that isn’t destined to itself?

I’ve looked into the syscalls of dnsmasq and I get pretty much the same:

root@dnsmasq1:~# strace -fffffffff -e trace=recvmsg,sendmsg -p 5454 2>&1 | grep pepinos

recvmsg(4, {msg_name={sa_family=AF_INET, sin_port=htons(53408), sin_addr=inet_addr("10.10.1.52")}, msg_namelen=28->16, msg_iov=[{iov_base="\2F\1 \0\1\0\0\0\0\0\1\3www\7pepinos\3com\0\0\1\0"..., iov_len=4096}], msg_iovlen=1, msg_control=[{cmsg_len=28, cmsg_level=SOL_IP, cmsg_type=IP_PKTINFO, cmsg_data={ipi_ifindex=if_nametoindex("ens4"), ipi_spec_dst=inet_addr("10.1.16.240"), ipi_addr=inet_addr("10.1.16.240")}}], msg_controllen=32, msg_flags=0}, 0) = 56

sendmsg(4, {msg_name={sa_family=AF_INET, sin_port=htons(53408), sin_addr=inet_addr("10.10.1.52")}, msg_namelen=16, msg_iov=[{iov_base="\2F\201\200\0\1\0\2\0\0\0\1\3www\7pepinos\3com\0\0\1\0"..., iov_len=76}], msg_iovlen=1, msg_control=[{cmsg_len=28, cmsg_level=SOL_IP, cmsg_type=IP_PKTINFO, cmsg_data={ipi_ifindex=0, ipi_spec_dst=inet_addr("10.1.16.240"), ipi_addr=inet_addr("20.86.0.0")}}], msg_controllen=28, msg_flags=0}, 0) = 76

So it is clear: the dnsmasq node has no problem accepting a packet addressed to an IP that none of its interfaces seem to own, and replying from that same IP. My first thought was promiscuous mode, but that is a link-layer setting, and this box isn’t set up to do anything fancy (let alone promiscuous mode).
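The strace output shows how dnsmasq handles this from userland: it reads the original destination out of the IP_PKTINFO control message on receive, and hands the same address back as the source on send. Here is a minimal Python sketch of that pattern. The helper names (parse_pktinfo, build_pktinfo, serve_once) are made up for illustration; this is not dnsmasq's actual code, and it assumes Linux.

```python
import socket
import struct

# 8 on Linux; older Python versions do not export the constant.
IP_PKTINFO = getattr(socket, "IP_PKTINFO", 8)

# struct in_pktinfo: int ipi_ifindex, then two IPv4 addresses
# (ipi_spec_dst, ipi_addr), 12 bytes in total.
PKTINFO = struct.Struct("@i4s4s")


def parse_pktinfo(data: bytes) -> tuple[int, str, str]:
    """Return (ifindex, spec_dst, addr) from an IP_PKTINFO control message."""
    ifindex, spec_dst, addr = PKTINFO.unpack(data[:PKTINFO.size])
    return ifindex, socket.inet_ntoa(spec_dst), socket.inet_ntoa(addr)


def build_pktinfo(source: str, ifindex: int = 0) -> bytes:
    """Build the control message asking the kernel to send from `source`."""
    return PKTINFO.pack(ifindex, socket.inet_aton(source),
                        socket.inet_aton("0.0.0.0"))


def serve_once(sock: socket.socket) -> None:
    """Echo one datagram back, using its original destination as the source.

    Assumes sock.setsockopt(socket.IPPROTO_IP, IP_PKTINFO, 1) was called
    before the datagram arrived.
    """
    data, ancdata, _flags, peer = sock.recvmsg(
        4096, socket.CMSG_SPACE(PKTINFO.size))
    for level, ctype, cdata in ancdata:
        if level == socket.IPPROTO_IP and ctype == IP_PKTINFO:
            _ifindex, _spec_dst, dst = parse_pktinfo(cdata)
            reply = [(socket.IPPROTO_IP, IP_PKTINFO, build_pktinfo(dst))]
            sock.sendmsg([data], reply, 0, peer)
```

On this node, dst would be 10.1.16.240, so the reply leaves with the load balancer's address as its source, which matches the ipi_spec_dst seen in the sendmsg call.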

eBPF?

Initially I thought it could be some eBPF program attached to XDP rewriting the IP headers, but a rewrite like that would be visible from userland in the strace output (dnsmasq would see the node's own address as the destination), and that was not the case. On top of that,

ip link|grep xdp -i

returned nothing. I also looked with bpftool and still found nothing.

Aliases?

No, nothing in the box: the dnsmasq node has only one interface with a single address.

Documentation?

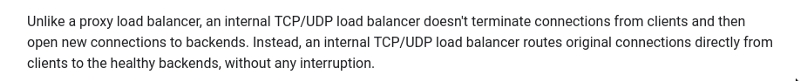

There are some lazy mentions of what is happening, but at no point is it documented what happens on the instance:

So that’s exactly what we see. The question is how the node responds to a packet that isn’t destined to it.

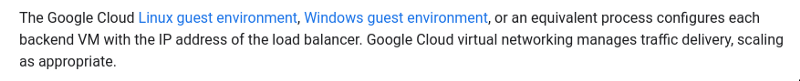

Virtio?

GCP instances come preloaded with a lot of tools and drivers. They use virtio for network drivers, and that is a hook where the hypervisor could control some of the incoming traffic:

Creating instances that use the Compute Engine virtual network interface

Internal TCP/UDP Load Balancing overview | Google Cloud

So there’s some data there, and if you run lsmod or systemctl list-unit-files you’ll see the bloat:

But I still think this is too complex: messing with drivers and/or eBPF to do something so silly and simple.

Routes:

Routes are something you could potentially use to divert traffic to yourself, so I started looking in the usual places:

root@dnsmasq:~# ip route

default via 10.1.16.1 dev ens4 proto dhcp src 10.1.16.238 metric 100

10.1.16.1 dev ens4 proto dhcp scope link src 10.1.16.238 metric 100

Nothing there. Remember that we’re looking for traces of 10.1.16.240 (which is the address of the load balancer).

I remembered that there is also the fib_trie, which displays local routes too, but before going there, let's ask the kernel which route it would use to get to 10.1.16.240:

root@dnsmasq1:~# ip route get to 10.1.16.240

local 10.1.16.240 dev lo src 10.1.16.240 uid 0

cache <local>

Wait, what? It's a local route? So I can ping you? (This LB is only passing UDP traffic.)

root@dnsmasq1:~# ping -c1 10.1.16.240

PING 10.1.16.240 (10.1.16.240) 56(84) bytes of data.

64 bytes from 10.1.16.240: icmp_seq=1 ttl=64 time=0.020 ms

The plot thickens. So somehow this route has been injected into the fib_trie:

root@dnsmasq1:~# grep 10.1.16.240 /proc/net/fib_trie

|-- 10.1.16.240

|-- 10.1.16.240

AH!!!!

root@dnsmasq1:~# ip route ls table local | grep 240

local 10.1.16.240 dev ens4 proto 66 scope host

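So the local routing table is a very special table that is maintained by the kernel. It holds the addresses the host treats as its own, and adding a local route to it works like an IP alias: the kernel accepts packets for that address even though it is not assigned to any interface (http://linux-ip.net/html/routing-tables.html)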
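If you want to spot this kind of entry without eyeballing the table, one rough approach is to parse the output of ip route ls table local and keep the local routes whose proto is not kernel (the kernel tags the entries it creates for assigned addresses with proto kernel). A minimal sketch follows. The function names are hypothetical, and the sample table is assumed: only the 10.1.16.240 line comes from the node above, the other lines are what such a table typically looks like.

```python
def parse_route(line: str) -> dict[str, str]:
    """Split one `ip route` line into type, destination and options."""
    tokens = line.split()
    route = {"type": tokens[0], "dst": tokens[1]}
    # In the lines handled here, the remaining tokens are "key value" pairs.
    rest = tokens[2:]
    route.update(zip(rest[0::2], rest[1::2]))
    return route


def foreign_local_routes(table: str) -> list[dict[str, str]]:
    """Return `local` routes that were not installed by the kernel itself."""
    routes = [parse_route(line) for line in table.splitlines() if line.strip()]
    return [r for r in routes
            if r["type"] == "local" and r.get("proto") != "kernel"]


# Assumed sample; only the "proto 66" line is taken from the real node.
SAMPLE = """\
local 10.1.16.238 dev ens4 proto kernel scope host src 10.1.16.238
local 10.1.16.240 dev ens4 proto 66 scope host
local 127.0.0.0/8 dev lo proto kernel scope host src 127.0.0.1
broadcast 127.255.255.255 dev lo proto kernel scope link src 127.0.0.1
"""

for route in foreign_local_routes(SAMPLE):
    print(route["dst"], route["dev"], route["proto"])  # 10.1.16.240 ens4 66
```

The pair-based parsing is deliberately naive: it would need extra care for flag-style tokens without a value (onlink, linkdown and friends).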

So that’s it. But who triggers this?

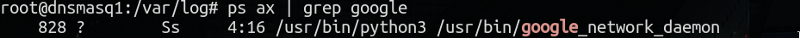

I’m not going to go into great detail about this, but:

They’ve got a daemon on your instance:

Here’s the repo:

GoogleCloudPlatform/compute-image-packages

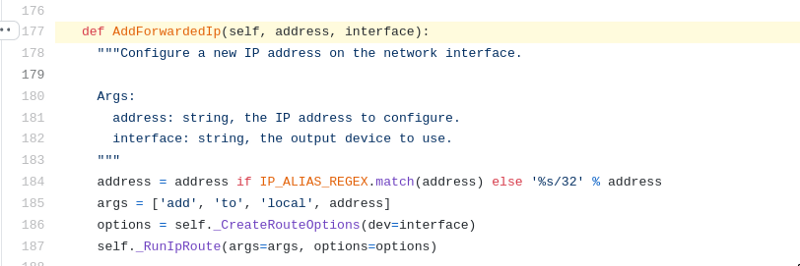

And more importantly here’s the magic:

Look at the args … sound familiar? It’s running ip route!

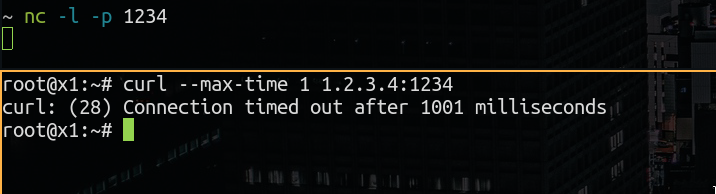
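To make the link with the route we found explicit, here is a small sketch that builds (and only prints, never runs) an ip route command that would produce an entry like the proto 66 one above. This is reconstructed from the route entry, not copied from the daemon: the function name is hypothetical and the exact arguments the daemon passes may differ.

```python
import shlex


def local_route_command(address: str, device: str, proto: str = "66") -> list[str]:
    """Build (but do not run) an `ip route` command marking `address` as local."""
    return ["ip", "route", "add", "local", address, "dev", device,
            "scope", "host", "proto", proto]


print(shlex.join(local_route_command("10.1.16.240", "ens4")))
# ip route add local 10.1.16.240 dev ens4 scope host proto 66
```

Running the printed command needs root, and on a real instance the daemon has already added the route, so it is only worth trying on a scratch machine.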

Let’s test this in a local env!

That won’t work, obviously: I don’t own the IP 1.2.3.4 (I wish I did)

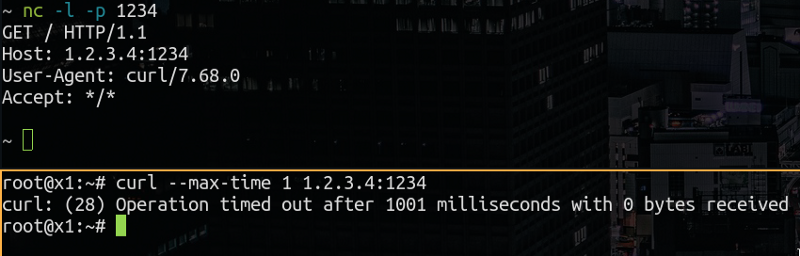

But let’s do what the daemon does by hand!

ip route add local 1.2.3.4 dev lo

perfect:

root@x1:~# ip route ls table local | grep 1.2.3.4

local 1.2.3.4 dev lo scope host

And now!

that’s the mystery solved!
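If you want to script the before/after check, one option is to ask the kernel whether it lets you bind a socket to the address. This is a minimal sketch with a hypothetical helper name, and it is not necessarily the check shown in the screenshots above.

```python
import socket


def can_bind(address: str) -> bool:
    """Return True if the kernel lets a UDP socket bind to `address`."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        try:
            sock.bind((address, 0))  # port 0: let the kernel pick a free port
        except OSError:
            return False
        return True


for address in ("127.0.0.1", "1.2.3.4"):
    print(address, can_bind(address))
```

Before adding the local route, 1.2.3.4 should normally come back False, and True afterwards. One caveat: if the net.ipv4.ip_nonlocal_bind sysctl is enabled, the bind succeeds for any address, so the check tells you nothing on such a box.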